Canary Deployment in Kubernetes (Part 2) — Automated Canary Deployment using Argo Rollouts

Deploying to production in Kubernetes can be quite stressful. Even after meaningful and reliable automated tests have successfully passed, there is still room for things to go wrong and lead to a nasty incident when pressing the final button.

Thankfully, Kubernetes is made to be resilient to this kind of scenario, and rolling back is a no-brainer. But still, rolling back means that, at least for some time, all of the users were negatively impacted by the faulty change…

What if we could smoke test our change in production before it actually hits real users? What if we could roll out a change incrementally to some users instead of all of them at once? What if we could detect a faulty deployment and roll it back automatically?

Well, that, my friend, is what Canary Deployment is all about!

Minimizing the impact on real users while deploying a risky change to production.

🎬 Hi there, I’m Jean!

In this 3-part series, we're exploring several ways to do Canary Deployment in Kubernetes, and the second one is…

🥁

… using Argo Rollouts! 🎊

Before we start, make sure you have the following tools installed: Kind, Kubectl, the Argo Rollouts Kubectl plugin, Helm, and k6.

Note: for macOS users or Linux users using Homebrew, simply run:

brew install kind kubectl argoproj/tap/kubectl-argo-rollouts helm k6
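You may want to confirm that every binary actually landed on your PATH before going further, and a tiny shell function can do that. This is only a sketch. The name require_tools is made up and is not part of the demo repository. The binary names are assumed from the Homebrew formulae above: the Argo Rollouts plugin is a kubectl plugin, so it is expected to install as a kubectl-argo-rollouts executable.

```shell
# require_tools: hypothetical helper, not part of the demo repository.
# Reports every tool missing from PATH on stderr and returns non-zero
# if at least one of them is missing.
require_tools() {
  local missing=0
  local tool
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing: $tool" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage:
#   require_tools kind kubectl kubectl-argo-rollouts helm k6 && echo "All set!"
```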

All set? Let’s go! 🏁

Kind is a tool for running local Kubernetes clusters using Docker container “nodes”. It was primarily designed for testing Kubernetes itself, but may be used for local development or CI.

I don’t expect you to have a demo project on hand, so I built one for you.

git clone https://github.com/jhandguy/canary-deployment.git

cd canary-deployment

Alright, let’s spin up our Kind cluster! 🚀

➜ kind create cluster --image kindest/node:v1.27.3 --config=kind/cluster.yaml

Creating cluster "kind" ...

✓ Ensuring node image (kindest/node:v1.27.3) 🖼

✓ Preparing nodes 📦

✓ Writing configuration 📜

✓ Starting control-plane 🕹️

✓ Installing CNI 🔌

✓ Installing StorageClass 💾

Set kubectl context to "kind-kind"

You can now use your cluster with:

kubectl cluster-info --context kind-kind

Have a question, bug, or feature request? Let us know! https://kind.sigs.k8s.io/#community 🙂

Argo Rollouts is a Kubernetes controller and set of CRDs which provide advanced deployment capabilities to Kubernetes such as blue-green, canary, canary analysis, experimentation, and progressive delivery.

In combination with the NGINX Ingress Controller, Argo Rollouts achieves the same result as a Simple Canary Deployment using Ingress NGINX, but with less resource duplication and without having to adjust the canary weight by hand.

If you haven’t already, go read Canary Deployment in Kubernetes (Part 1) — Simple Canary Deployment using Ingress NGINX and learn how to implement a Simple Canary Deployment using Ingress NGINX!

NGINX Ingress Controller is one of the many available Kubernetes Ingress Controllers, which acts as a load balancer and satisfies routing rules specified in Ingress resources, using the NGINX reverse proxy.

NGINX Ingress Controller can be installed via its Helm chart.

helm repo add ingress-nginx https://kubernetes.github.io/ingress-nginx

helm install ingress-nginx/ingress-nginx --name-template ingress-nginx --create-namespace -n ingress-nginx --values kind/ingress-nginx-values.yaml --version 4.8.3 --wait

Now, if everything goes according to plan, you should be able to see the ingress-nginx-controller Deployment running.

➜ kubectl get deploy -n ingress-nginx

NAME READY UP-TO-DATE AVAILABLE AGE

ingress-nginx-controller 1/1 1 1 4m35s
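The helm install above already blocks thanks to --wait. In a script or CI job, though, it can be handy to assert readiness explicitly. Here is a minimal sketch built on kubectl wait and the Deployment's Available condition. The function name and the 120s default timeout are assumptions made for this example.

```shell
# Hypothetical helper: block until the ingress-nginx-controller Deployment
# reports the Available condition, or fail once the timeout (default 120s) expires.
wait_for_ingress_nginx() {
  kubectl wait deployment/ingress-nginx-controller \
    --namespace ingress-nginx \
    --for=condition=Available \
    --timeout="${1:-120s}"
}

# Usage:
#   wait_for_ingress_nginx 180s
```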

Argo Rollouts can be installed via its Helm chart.

helm repo add argo https://argoproj.github.io/argo-helm

helm install argo/argo-rollouts --name-template argo-rollouts --create-namespace -n argo-rollouts --set dashboard.enabled=true --version 2.32.5 --wait

If all goes well, you should see two newly spawned Deployments, both READY.

➜ kubectl get deploy -n argo-rollouts

NAME READY UP-TO-DATE AVAILABLE AGE

argo-rollouts 1/1 1 1 13m

argo-rollouts-dashboard 1/1 1 1 13m
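The chart should also have registered the Custom Resource Definitions that the rest of this article relies on, such as the one behind the Rollout resource. Assuming the standard argoproj.io CRD names, you can check they are present as sketched below. The helper name is hypothetical.

```shell
# Hypothetical helper: confirm that the Argo Rollouts CRDs are registered.
# kubectl exits non-zero if any of the named CRDs is missing.
check_argo_rollouts_crds() {
  kubectl get crd \
    rollouts.argoproj.io \
    analysistemplates.argoproj.io \
    analysisruns.argoproj.io
}
```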

One of the limitations of using Ingress NGINX alone is that it requires the duplication of quite a few Kubernetes resources. If you remember, in Part 1 the Deployment, Service, and Ingress resources were almost identically cloned.

With Argo Rollouts, the duplication of Kubernetes resources is reduced to only one: the Service resource.

Another limitation of using plain Ingress NGINX is that the incremental rollout of the Canary Deployment (via canary-weight) has to be done manually by annotating the canary Ingress.

Argo Rollouts introduces its own Custom Resource to remedy that problem: the Rollout resource.
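For reference, here is roughly what a single weight bump looks like when done by hand, Part 1 style: overwriting the canary-weight annotation on the canary Ingress. The function name and its arguments are hypothetical. The annotation key is the standard ingress-nginx one, and it also appears on the Argo-managed canary Ingress later in this article. Once a Rollout manages the canary Ingress, avoid editing it like this. The controller owns that annotation and will most likely set it back.

```shell
# Hypothetical helper: manually set the canary weight on a canary Ingress,
# the way it had to be done without Argo Rollouts.
# Arguments: INGRESS_NAME NAMESPACE WEIGHT
set_canary_weight_manually() {
  kubectl annotate ingress "$1" \
    --namespace "$2" \
    --overwrite \
    "nginx.ingress.kubernetes.io/canary-weight=$3"
}

# Usage (hypothetical names):
#   set_canary_weight_manually my-app-canary my-namespace 50
```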

Alrighty then! Let’s get to it! 🧐

helm install sample-app/helm-charts/argo-rollouts --name-template sample-app --create-namespace -n sample-app --wait

If everything goes fine, you should eventually see one Rollout with its single replica available.

➜ kubectl get rollout sample-app -n sample-app

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE

sample-app 1 1 1 1
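Instead of re-running kubectl get until the numbers line up, you can ask the Argo Rollouts plugin to wait for the Rollout to report Healthy. Below is a small wrapper. Its name is hypothetical, and its defaults are taken from this article's sample app.

```shell
# Hypothetical helper: wait for a Rollout to become Healthy via the
# Argo Rollouts kubectl plugin.
# Arguments (all optional): ROLLOUT NAMESPACE TIMEOUT
wait_for_rollout() {
  kubectl argo rollouts status "${1:-sample-app}" \
    -n "${2:-sample-app}" \
    --timeout "${3:-60s}"
}
```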

Alright, let’s take a look at what’s under all this!

➜ ls -1 sample-app/helm-charts/argo-rollouts/templates

analysistemplate.yaml

canary

ingress.yaml

rollout.yaml

service.yaml

serviceaccount.yaml

servicemonitor.yaml

➜ ls -1 sample-app/helm-charts/argo-rollouts/templates/canary

service.yaml

Note: ignore the analysistemplate.yaml and the servicemonitor.yaml for now and focus on the rest of the resources.

As you can see, there is only one resource that has been duplicated in the canary folder: the service.yaml.

Now you might wonder, where did the Deployment resource go?! 🤔

In Argo Rollouts, the Deployment resource has been replaced with the Rollout resource. It is pretty much the same though, except it contains some extra specifications.

In fact, if you compare the deployment.yaml from Part 1 with the rollout.yaml, you will find that they are very similar, except for the strategy spec, whose canary section is specific to the Rollout resource.

---
apiVersion: argoproj.io/v1alpha1
kind: Rollout
...
spec:
  ...
  strategy:
    canary:
      canaryService: {{ .Release.Name }}-canary
      stableService: {{ .Release.Name }}
      canaryMetadata:
        labels:
          deployment: canary
      stableMetadata:
        labels:
          deployment: stable
      trafficRouting:
        nginx:
          stableIngress: {{ .Release.Name }}
          additionalIngressAnnotations:
            canary-by-header: X-Canary
      steps:
        - setWeight: 25
        - pause: {}
        - setWeight: 50
        - pause:
            duration: 5m
        - setWeight: 75
        - pause:
            duration: 5m
  ...

Now, let’s break down what is happening here:

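- First, we tell the Argo Rollouts Controller which Service is the canaryService and which is the stableService, so it can update them to select their corresponding pods on the fly;
- Then, we define the labels to attach to the canary and stable pods via canaryMetadata and stableMetadata;
- Next, we point it to the stableIngress, so it can automatically create the canary Ingress from it;
- Finally, instead of us manually updating the canary-weight annotation, the Argo Rollouts Controller does it for us automatically, thanks to the steps specification.

Here are three kinds of steps worth knowing about (Argo Rollouts supports a few more, such as analysis and experiment):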

- pause: puts the Rollout in a Paused state. It can either be a timed pause (e.g. duration: 5m) or an indefinite pause that lasts until the Rollout is manually resumed;
- setWeight: sets the canary Ingress's canary-weight annotation to the desired weight (e.g. setWeight: 25);
- setCanaryScale: sets the number of replicas behind the canary Service independently of the traffic weight (e.g. setCanaryScale with replicas: 4).

Awesome! Now that our Rollout is up and we understand how it works under the hood, let’s take it for a spin! 🚗

But first, let’s have a look at the dashboard!

kubectl argo rollouts dashboard -n argo-rollouts &

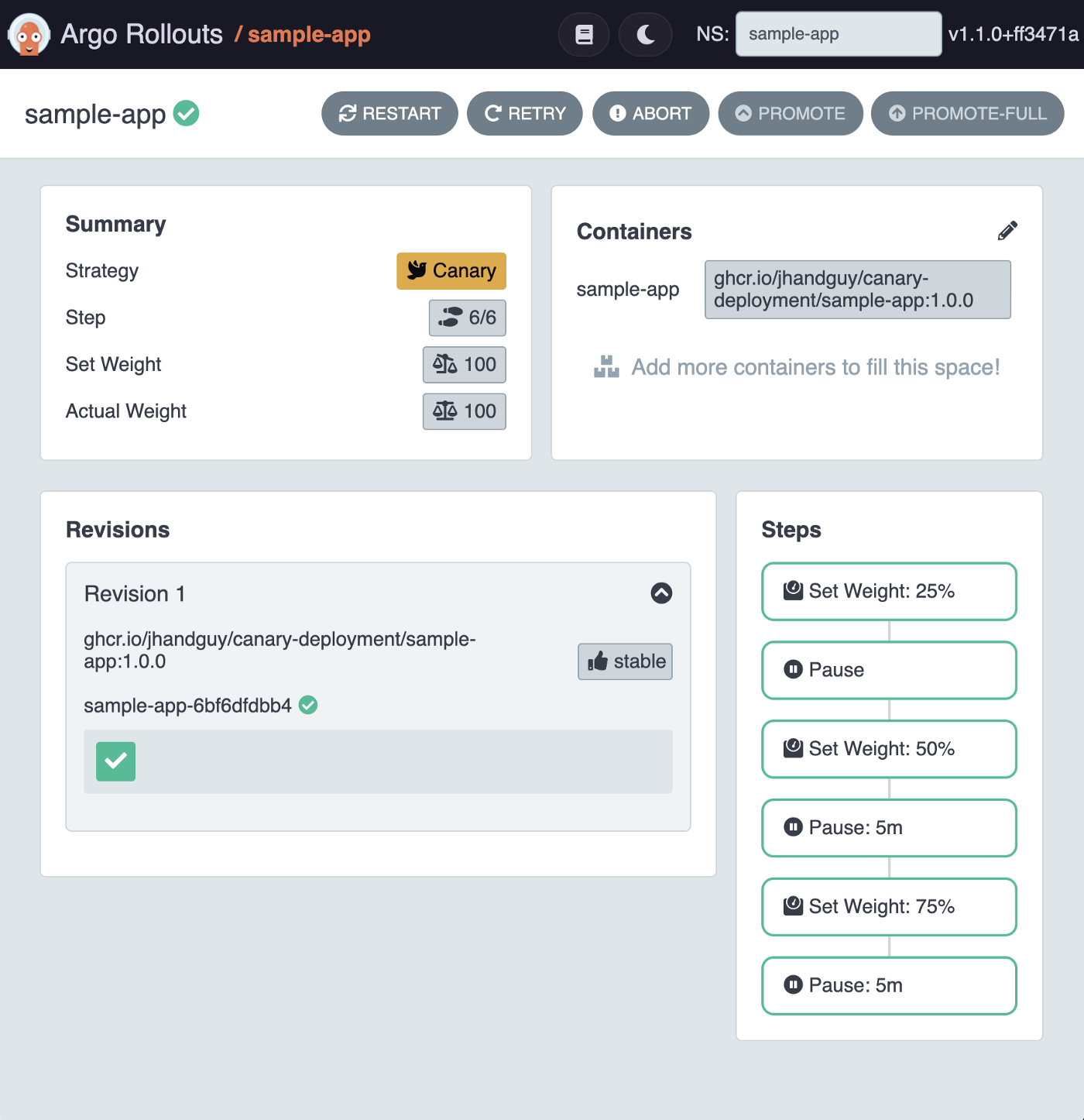

If you now head to http://localhost:3100/rollout/sample-app, you should see a shiny dashboard showing the state of the sample-app Rollout.

Cool! So far, we can observe only one revision labeled as stable, serving 100% of the traffic.

Now, let’s set a new image for the container and see what happens!

kubectl argo rollouts set image sample-app sample-app=ghcr.io/jhandguy/canary-deployment/sample-app:latest -n sample-app

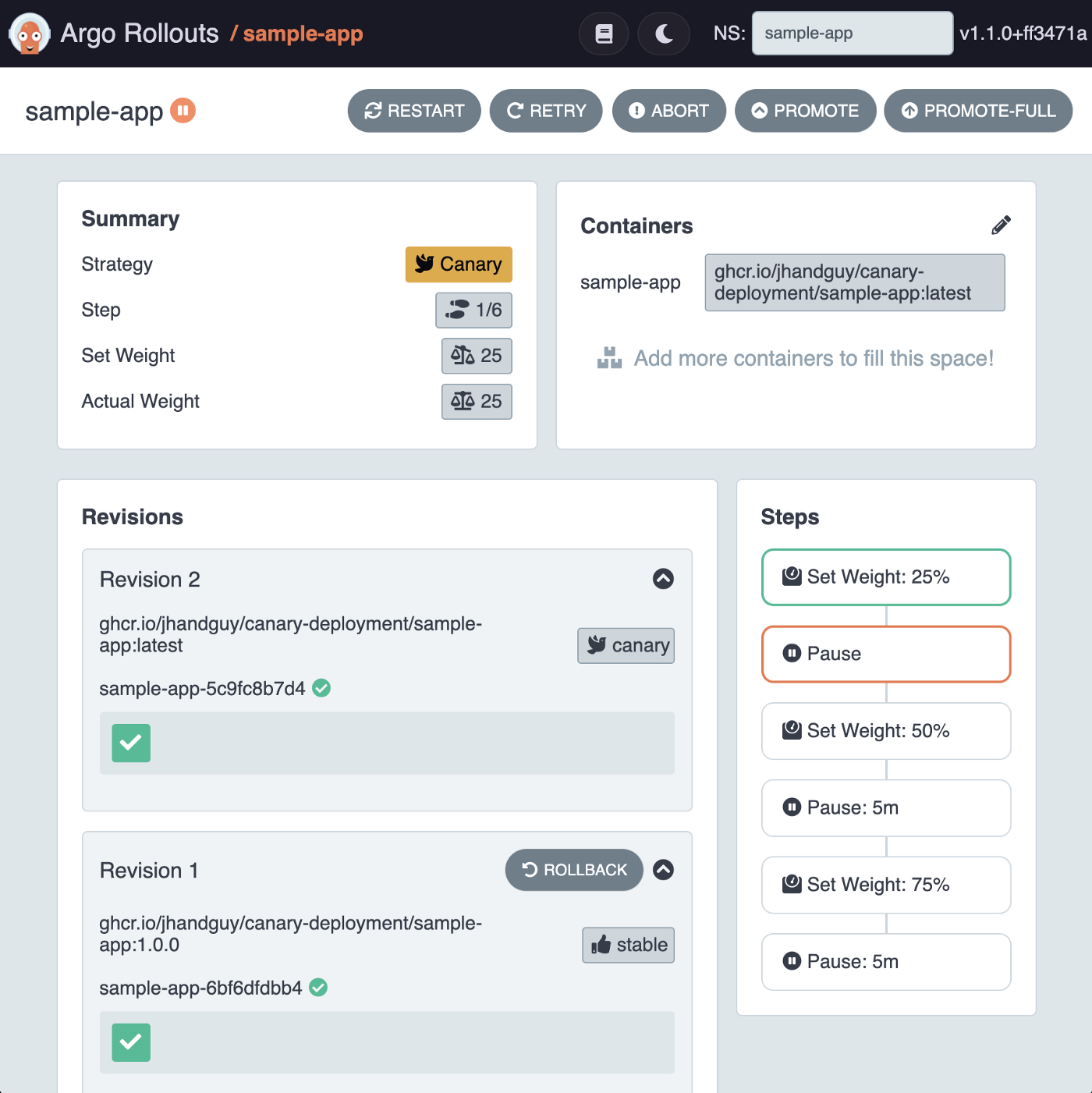

Aha! A new revision has been created and was labeled as canary. The weight for the canary Ingress has been set to 25% and the Rollout has now entered the Paused state.
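If you would rather stay in the terminal, the Argo Rollouts plugin can render the same information, with revisions, weights and the current step, and refresh it as the Rollout progresses. The wrapper below is a hypothetical convenience. Only the underlying kubectl argo rollouts get rollout command and its --watch flag come from the plugin.

```shell
# Hypothetical helper: follow a Rollout live in the terminal.
# Arguments (both optional): ROLLOUT NAMESPACE
watch_rollout() {
  kubectl argo rollouts get rollout "${1:-sample-app}" -n "${2:-sample-app}" --watch
}
```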

Let’s verify this real quick!

➜ kubectl get ingress -n sample-app

NAME CLASS HOSTS ADDRESS ...

sample-app <none> sample.app localhost ...

sample-app-sample-app-canary <none> sample.app localhost ...

➜ kubectl describe ingress sample-app-sample-app-canary -n sample-app

...

Annotations: kubernetes.io/ingress.class: nginx

nginx.ingress.kubernetes.io/canary: true

nginx.ingress.kubernetes.io/canary-by-header: X-Canary

nginx.ingress.kubernetes.io/canary-weight: 25

...

Indeed! An extra Ingress resource was automatically created, and given a canary-weight of 25.
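When scripting around a Rollout, you may want to read the current weight without scanning the whole describe output. Here is a sketch using kubectl's JSONPath output, assuming the canary Ingress name shown above. The dots in the annotation key have to be escaped in JSONPath. The helper name is hypothetical.

```shell
# Hypothetical helper: print the canary-weight annotation that Argo Rollouts
# manages on the canary Ingress.
# Arguments (both optional): INGRESS_NAME NAMESPACE
canary_weight() {
  kubectl get ingress "${1:-sample-app-sample-app-canary}" -n "${2:-sample-app}" \
    -o jsonpath='{.metadata.annotations.nginx\.ingress\.kubernetes\.io/canary-weight}'
}
```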

Let’s see if it works!

curl localhost/success -H "Host: sample.app"

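As you can see, in this run one out of 4 requests came back from the canary pod. NGINX picks the canary at random on each request according to the weight, so don't expect exactly one in four over such a small sample.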

➜ curl localhost/success -H "Host: sample.app"

{"node":"kind-control-plane","namespace":"sample-app","pod":"sample-app-6bf6dfdbb4-xmqdw","deployment":"stable"}

➜ curl localhost/success -H "Host: sample.app"

{"node":"kind-control-plane","namespace":"sample-app","pod":"sample-app-6bf6dfdbb4-xmqdw","deployment":"stable"}

➜ curl localhost/success -H "Host: sample.app"

{"node":"kind-control-plane","namespace":"sample-app","pod":"sample-app-6bf6dfdbb4-xmqdw","deployment":"stable"}

➜ curl localhost/success -H "Host: sample.app"

{"node":"kind-control-plane","namespace":"sample-app","pod":"sample-app-5c9fc8b7d4-wdrck","deployment":"canary"}
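Four requests is a tiny sample. To get a better feel for the split without reaching for a load testing tool yet, you can fire more requests and count the deployment field of each response. Below is a minimal sketch, assuming the response format shown above: one JSON object with a "deployment" key per request. Both function names are hypothetical.

```shell
# Hypothetical helpers for eyeballing the traffic split from the command line.

# fire_requests N [extra curl args...]: send N requests (default 20) through
# the NGINX Ingress, printing one JSON response per line.
fire_requests() {
  local n="${1:-20}"
  local i=0
  [ "$#" -gt 0 ] && shift
  while [ "$i" -lt "$n" ]; do
    curl -s localhost/success -H "Host: sample.app" "$@"
    echo
    i=$((i + 1))
  done
}

# tally_deployments: read JSON responses on stdin and count them per
# "deployment" value (e.g. stable / canary).
tally_deployments() {
  grep -o '"deployment":"[^"]*"' | cut -d'"' -f4 | sort | uniq -c
}

# Usage:
#   fire_requests 100 | tally_deployments
```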

And, the same way as in Part 1, we can easily Smoke Test our change in isolation by leveraging the Ingress canary-by-header annotation.

curl localhost/success -H "Host: sample.app" -H "X-Canary: always"

Aha! We are now consistently hitting the canary pod.

➜ curl localhost/success -H "Host: sample.app" -H "X-Canary: always"

{"node":"kind-control-plane","namespace":"sample-app","pod":"sample-app-5c9fc8b7d4-wdrck","deployment":"canary"}

➜ curl localhost/success -H "Host: sample.app" -H "X-Canary: always"

{"node":"kind-control-plane","namespace":"sample-app","pod":"sample-app-5c9fc8b7d4-wdrck","deployment":"canary"}

➜ curl localhost/success -H "Host: sample.app" -H "X-Canary: always"

{"node":"kind-control-plane","namespace":"sample-app","pod":"sample-app-5c9fc8b7d4-wdrck","deployment":"canary"}
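This check is easy to turn into a pass/fail smoke test. Send a few requests with the X-Canary: always header and fail unless every single response reports the canary deployment. The sketch below only does the counting, reading responses on stdin. The function name is hypothetical, and the usage example in the comments reuses the exact curl command from above.

```shell
# Hypothetical smoke-test gate: reads JSON responses on stdin and succeeds only
# if there was at least one response and all of them came from the canary.
assert_all_canary() {
  local total=0
  local canary=0
  local line
  while IFS= read -r line; do
    [ -z "$line" ] && continue
    total=$((total + 1))
    case "$line" in
      *'"deployment":"canary"'*) canary=$((canary + 1)) ;;
    esac
  done
  echo "$canary/$total responses served by the canary"
  [ "$total" -gt 0 ] && [ "$canary" -eq "$total" ]
}

# Usage (5 requests pinned to the canary via the X-Canary header):
#   for i in 1 2 3 4 5; do
#     curl -s localhost/success -H "Host: sample.app" -H "X-Canary: always"; echo
#   done | assert_all_canary
```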

Now that we’ve made a few requests, let’s see how it behaves under load and whether or not it indeed delivers a rough 25/75 traffic split!

For Load Testing, I really recommend k6 from the Grafana Labs team. It is a dead-simple yet super powerful tool with very extensive documentation.

See for yourself!

k6 run k6/script.js

After about 1 minute, k6 should be done executing the load test and show you the results.

execution: local

script: k6/script.js

output: -

scenarios: (100.00%) 1 scenario, 20 max VUs, 1m30s max duration (incl. graceful stop):

* load: Up to 20.00 iterations/s for 1m0s over 2 stages (maxVUs: 20, gracefulStop: 30s)

✓ status code is 200

✓ node is kind-control-plane

✓ namespace is sample-app

✓ pod is sample-app-*

✓ deployment is stable or canary

✓ checks.........................: 100.00% ✓ 3100 ✗ 0

data_received..................: 157 kB 2.6 kB/s

data_sent......................: 71 kB 1.2 kB/s

http_req_blocked...............: avg=42.6µs min=2µs med=15µs max=2.44ms p(90)=23µs p(95)=36.29µs

http_req_connecting............: avg=20.73µs min=0s med=0s max=1.49ms p(90)=0s p(95)=0s

✓ http_req_duration..............: avg=4.73ms min=829µs med=4.09ms max=36.81ms p(90)=7.89ms p(95)=10.14ms

{ expected_response:true }...: avg=4.73ms min=829µs med=4.09ms max=36.81ms p(90)=7.89ms p(95)=10.14ms

http_req_failed................: 0.00% ✓ 0 ✗ 620

http_req_rate..................: 50.00% ✓ 620 ✗ 620

✓ { deployment:canary }........: 28.70% ✓ 178 ✗ 442

✓ { deployment:stable }........: 71.29% ✓ 442 ✗ 178

http_req_receiving.............: avg=129.17µs min=21µs med=128µs max=2.05ms p(90)=186µs p(95)=214.04µs

http_req_sending...............: avg=65.53µs min=11µs med=62µs max=1.96ms p(90)=92.1µs p(95)=104.04µs

http_req_tls_handshaking.......: avg=0s min=0s med=0s max=0s p(90)=0s p(95)=0s

http_req_waiting...............: avg=4.53ms min=774µs med=3.88ms max=36.6ms p(90)=7.7ms p(95)=9.73ms

http_reqs......................: 620 10.333024/s

iteration_duration.............: avg=5.4ms min=1.07ms med=4.81ms max=38.46ms p(90)=8.76ms p(95)=10.76ms

iterations.....................: 620 10.333024/s

vus............................: 0 min=0 max=1

vus_max........................: 20 min=20 max=20

running (1m00.0s), 00/20 VUs, 620 complete and 0 interrupted iterations

load ✓ [======================================] 00/20 VUs 1m0s 00.71 iters/s

That sounds about right!

Out of 620 requests, 178 (28.7%) were served by the canary pods while 442 (71.3%) were served by the stable ones. Pretty good!
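How close is close enough? One way to make "pretty good" concrete is to compare the observed canary share against the configured weight with some tolerance. Here, 178 / 620 ≈ 28.7%, which is about 3.7 percentage points above the 25% setWeight. The ±5 point tolerance and the check_split name below are arbitrary choices for illustration, not something Argo Rollouts provides.

```shell
# Hypothetical helper: check_split CANARY_REQUESTS TOTAL_REQUESTS WEIGHT TOLERANCE
# Prints the observed canary share and succeeds only if it lies within
# TOLERANCE percentage points of the configured WEIGHT.
check_split() {
  awk -v c="$1" -v t="$2" -v w="$3" -v tol="$4" 'BEGIN {
    if (t <= 0) exit 2                      # no requests: nothing to compare
    share = 100 * c / t
    printf "canary share: %.2f%% (target %s%% +/- %s)\n", share, w, tol
    diff = share - w
    if (diff < 0) diff = -diff
    if (diff <= tol) exit 0
    exit 1
  }'
}

# Usage, with the numbers from the k6 run above:
#   check_split 178 620 25 5   # prints "canary share: 28.71% (target 25% +/- 5)"
```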

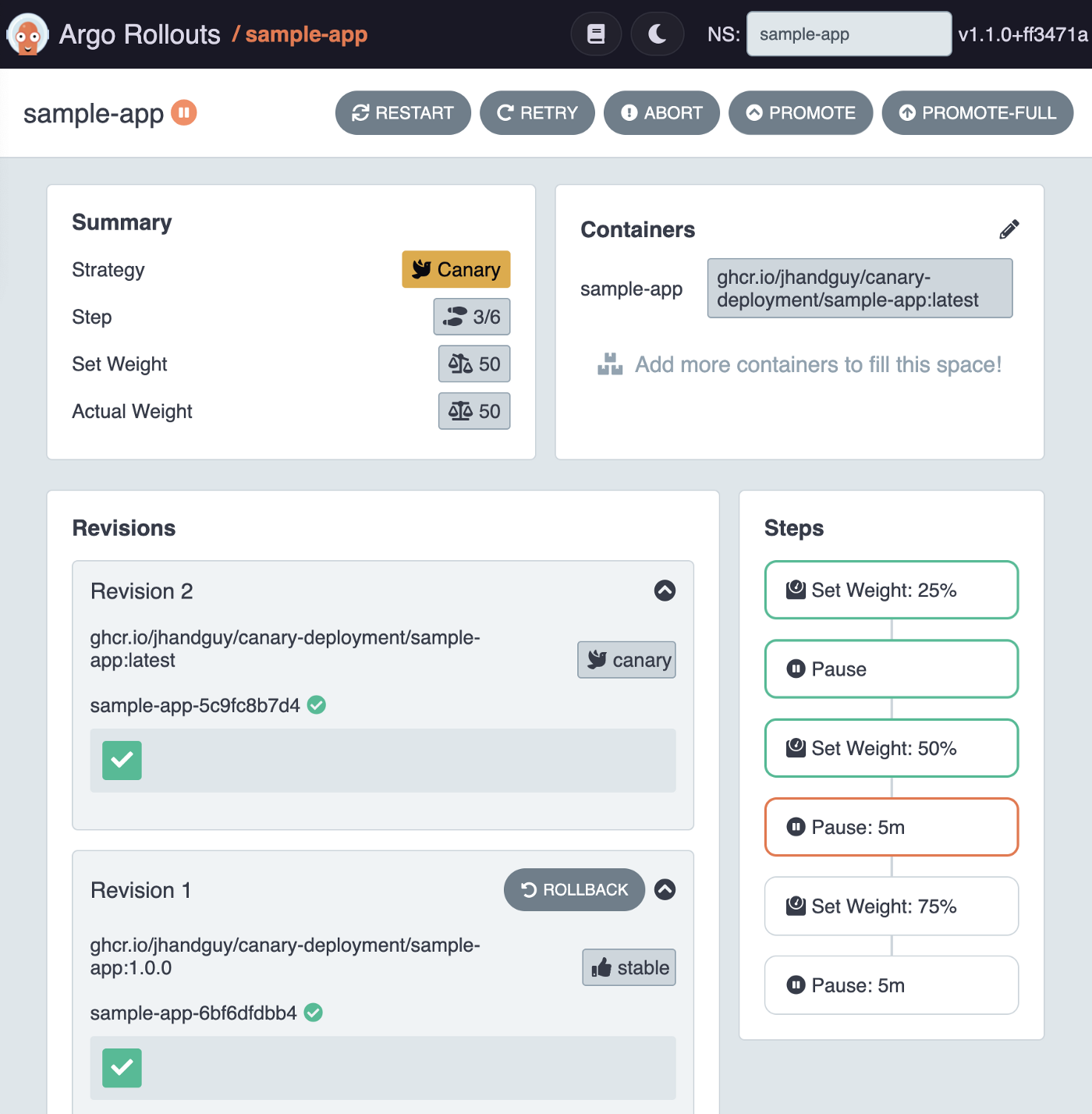

Once we feel confident enough to proceed to the next step, we can promote our Rollout, either from the dashboard via the PROMOTE button, or via the Kubectl Plugin.

kubectl argo rollouts promote sample-app -n sample-app

Note: while the rollout is ongoing, keep firing some curl requests or run the k6 Load Test script again to observe the change in traffic distribution!
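The remaining steps only involve timed pauses, so a script can promote the Rollout and then wait for it to settle. Below is a sketch with a hypothetical function name. The 15m timeout is an assumed budget sized to cover the two 5-minute pauses. It also assumes that kubectl argo rollouts status keeps watching through timed pauses until the Rollout is Healthy or Degraded, which is worth verifying against your plugin version.

```shell
# Hypothetical helper: promote the Rollout past its indefinite pause, then
# watch the remaining steps until it settles or the timeout expires.
# Arguments (both optional): ROLLOUT NAMESPACE
promote_and_wait() {
  local rollout="${1:-sample-app}"
  local ns="${2:-sample-app}"
  kubectl argo rollouts promote "$rollout" -n "$ns" &&
    kubectl argo rollouts status "$rollout" -n "$ns" --timeout 15m
}
```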

And if we feel so confident that we want to skip all the remaining Rollout steps and go straight to 100% canary traffic, we can fully promote the Rollout, again either from the dashboard via the PROMOTE-FULL button, or from the command line.

kubectl argo rollouts promote sample-app -n sample-app --full

If, on the contrary, we find an issue in the middle of rolling out a change, we can easily abort the Rollout and return to 100% stable traffic, either from the dashboard via the ABORT button, or from the CLI.

kubectl argo rollouts abort sample-app -n sample-app

Finally, if something goes wrong after the Rollout is complete, we can undo it and roll back to the previous revision, just like we would with a standard Deployment, either from the dashboard via the ROLLBACK button, or via Kubectl.

kubectl argo rollouts undo sample-app -n sample-app

That’s it! You can now delete your Kind cluster.

kind delete cluster

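To summarize, using Argo Rollouts we were able to:

- reduce the duplication of Kubernetes resources to a single one, the canary Service;
- roll out a change incrementally and automatically thanks to the Rollout steps, which would otherwise have to be done manually by editing the canary Ingress annotation directly.

Was it worth it? Did that help you understand how to implement Canary Deployment in Kubernetes using Argo Rollouts?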

If so, follow me on X, I’ll be happy to answer any of your questions and you’ll be the first one to know when a new article comes out! 👌

See you next week for Part 3 of my series Canary Deployment in Kubernetes!

Bye-bye! 👋